What is request coalescing?

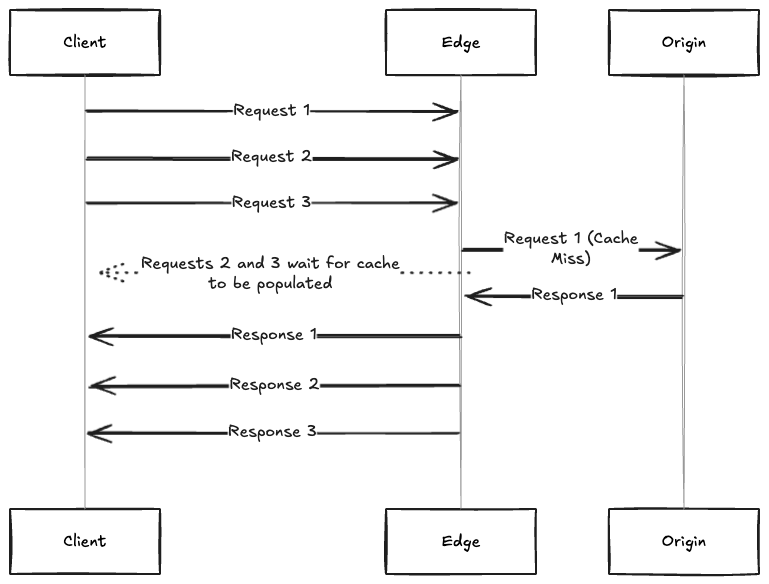

Request coalescing is a mechanism commonly used in edge caching. It prevents simultaneous requests for the same resource from all hitting the origin by allowing only one of them to populate the edge cache with the origin's response. All other requests wait until the cache is populated and are then served directly from the cache. This significantly lowers the load on the origin for cache MISS requests, which helps absorb traffic spikes on your site.

Nginx supports request coalescing through the proxy_cache_lock directive, which has some drawbacks described below.

Request locking with proxy_cache_lock

> proxy_cache_lock
>
> When enabled, only one request at a time will be allowed to populate a new cache element identified according to the proxy_cache_key directive by passing a request to a proxied server. Other requests of the same cache element will either wait for a response to appear in the cache or the cache lock for this element to be released, up to the time set by the proxy_cache_lock_timeout directive.
>
> Source: https://nginx.org/en/docs/http/ngx_http_proxy_module.html#proxy_cache_lock

Because Nginx is event-driven, a waiting request cannot simply block on the lock. Instead, proxy_cache_lock locks the resource, and all other requests periodically check whether the lock has been released before they can read the cache and respond to the client. The lock is global, not per worker as some sources claim, so even with multiple Nginx workers, only one request reaches the origin for a given proxy_cache_key.

Locking is skipped for requests that have proxy_cache_bypass or proxy_no_cache set.
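For reference, a minimal configuration that turns the lock on might look like the sketch below. The cache path, the zone name `edge_cache`, the upstream name `origin_backend`, its address, and the cache key are all placeholders chosen for illustration; the two timeout values shown are the documented defaults.

```nginx
# http context
proxy_cache_path /var/cache/nginx/edge keys_zone=edge_cache:10m;  # hypothetical path and zone

upstream origin_backend {            # hypothetical origin
    server 127.0.0.1:8080;
}

server {
    listen 80;

    location / {
        proxy_pass http://origin_backend;

        proxy_cache       edge_cache;
        proxy_cache_key   "$scheme$host$request_uri";  # example key, adjust to your setup
        proxy_cache_valid 200 10s;

        # Let only one request per cache key go to the origin on a MISS.
        proxy_cache_lock         on;
        # How long other requests wait for the lock before going to the origin themselves.
        proxy_cache_lock_timeout 5s;
        # After this long without a response, one more request may be passed to the origin.
        proxy_cache_lock_age     5s;
    }
}
```

Note that a request that gives up after proxy_cache_lock_timeout is passed to the origin, but its response is not cached.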

The lock release check runs only every 500ms, and the interval cannot be configured. In the worst case, simultaneous requests that get locked have 500ms added to their response times, as shown in the image below. In the example, the first request locks the resource, so the other two simultaneous requests wait for the lock to be released. The origin responds after 100ms, so the response time for the first request is 100ms, while the other two requests wait an additional 400ms until their next check finds the lock released. The fourth request reaches the server just before the lock is removed, so it also waits the full 500ms before it checks the lock and responds.
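The arithmetic in this example can be written down as a rough model. It assumes each waiting request checks the lock on its own 500ms timer, started when the request arrives, and it ignores proxy_cache_lock_timeout; the symbols are introduced here only for illustration.

$$
T_{\text{wait}} = \left\lceil \frac{t_{\text{release}} - t_{\text{arrival}}}{500\ \text{ms}} \right\rceil \times 500\ \text{ms}
$$

For the two requests that arrive together with the first one ($t_{\text{arrival}} \approx 0$, $t_{\text{release}} = 100$ ms), this gives $\lceil 100/500 \rceil \times 500 = 500$ ms, which is the 100ms origin response plus the additional 400ms. For a request arriving at, say, 90ms, it gives $\lceil 10/500 \rceil \times 500 = 500$ ms as well, even though the lock is released only 10ms after the request arrives.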

Sometimes, this is better than having all requests reach the origin, especially when the origin is slow. However, it can be problematic for fast websites, as the increase in response times lessens the benefit.

Patching Nginx to configure lock release wait time

In March, I submitted a patch to Nginx that makes this interval configurable through a proxy_cache_lock_wait_time directive, but I haven’t heard back. If you build Nginx from source, you can still apply the patch and use the directive to set a lower lock wait time.
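With the patch applied, using the directive might look like the sketch below. The syntax (a time value such as `10ms`) is an assumption based on the values used in the test that follows; the zone `edge_cache` and upstream `origin_backend` are placeholders. Stock Nginx does not know this directive and will refuse to start with it in the configuration.

```nginx
location / {
    proxy_pass  http://origin_backend;   # hypothetical upstream
    proxy_cache edge_cache;              # hypothetical cache zone

    proxy_cache_lock on;

    # Only available in a patched build. Assumed syntax: how often a waiting
    # request re-checks the lock, instead of the hard-coded 500ms.
    proxy_cache_lock_wait_time 10ms;
}
```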

I ran a simple test where 10 requests hit an uncached resource simultaneously. The first column represents results on Nginx without proxy_cache_lock enabled. The second column sets proxy_cache_lock_wait_time at 10ms, the third column at 50ms, and the last column has it at the default 500ms setting. It is clear from the test how the wait time affects the Time To First Byte (TTFB).

| | No lock | 10ms lock wait | 50ms lock wait | 500ms lock wait |
| --- | --- | --- | --- | --- |
| min TTFB | 6.45 ms | 6.93 ms | 6.74 ms | 6.3 ms |
| max TTFB | 9.4 ms | 11.2 ms | 54 ms | 501.67 ms |
| avg TTFB | 8.3 ms | 10.1 ms | 48.08 ms | 349.8 ms |
| p95 TTFB | 8.8 ms | 10.98 ms | 53.07 ms | 501.34 ms |
| Requests to origin | 10 | 1 | 1 | 1 |

Prevent locking cache bypasses with hit-for-pass

Another drawback of proxy_cache_lock is that it locks all cache miss requests without an option to exclude some requests from the lock.

For example, suppose you want to bypass the edge cache for all responses from the origin that contain an X-Cache-Bypass response header. In the phase where the cache lock is applied, Nginx cannot know that a request will bypass the cache, because it doesn’t yet know which headers the origin will send. As a result, it locks all requests.

This is expected, as Nginx doesn’t see the future to determine if the cache will be bypassed, but some other tools have a solution for this problem. Varnish implements a hit-for-pass mechanism, which essentially caches the fact that the request shouldn’t be cached.

Hit-for-pass marks an otherwise cacheable resource as uncacheable for a specific time. Whenever a request for the resource comes in, it bypasses the cache and goes straight to the origin without any locking.

If you’re using OpenResty’s lua-nginx-module, you can implement this using shared memory dictionaries. In the header_filter phase, you store the cache keys of resources that should bypass the cache, based on response parameters. Then, in the access phase, you check whether the resource is marked as hit-for-pass and bypass the cache if it is. Remember, bypassing the cache prevents proxy_cache_lock from engaging, so requests go directly to the origin.

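Below is a sketch of a hit-for-pass mechanism for Nginx using Lua. The $cache_key value and the explicit declaration of $lua_cache_bypass are assumptions added so that the snippet is self-consistent; adapt them to your configuration.

    # http context: shared memory for hit-for-pass markers
    lua_shared_dict hit_for_pass 100m;

    # server or location context, next to proxy_pass and proxy_cache:

    # Declare the variables so Lua can read and assign them.
    set $lua_cache_bypass "";
    set $cache_key "$scheme$host$request_uri";  # example key (assumption)

    header_filter_by_lua_block {
        -- The origin marks a response as uncacheable with X-Cache-Bypass.
        local function is_uncacheable()
            return ngx.header["X-Cache-Bypass"] ~= nil
        end

        if is_uncacheable() then
            local cache_key = ngx.md5(ngx.var.cache_key)
            -- Remember the key for 10 seconds; add() does nothing if it already exists.
            ngx.shared.hit_for_pass:add(cache_key, 1, 10)
        end
    }

    access_by_lua_block {
        local cache_key = ngx.md5(ngx.var.cache_key)
        local hfp = ngx.shared.hit_for_pass:get(cache_key)

        -- A marker means a recent response was uncacheable: skip cache and lock.
        if hfp then
            ngx.var.lua_cache_bypass = 1
        end
    }

    proxy_cache_bypass $lua_cache_bypass;
    proxy_cache_key $cache_key;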
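The hit-for-pass configuration above only decides whether a request skips the cache lookup. It presumably relies on responses that carry X-Cache-Bypass not being stored in the first place. One way to do that, shown here as an assumption rather than as part of the original setup, is the proxy_no_cache directive together with the `$upstream_http_x_cache_bypass` variable. The zone `edge_cache` and upstream `origin_backend` are placeholders.

```nginx
location / {
    proxy_pass  http://origin_backend;   # hypothetical upstream
    proxy_cache edge_cache;              # hypothetical cache zone

    # Do not save a response when the origin sends an X-Cache-Bypass header
    # whose value is non-empty and not "0".
    proxy_no_cache $upstream_http_x_cache_bypass;
}
```

One difference to keep in mind: proxy_no_cache treats an empty value or `0` as "cacheable", while the Lua check marks the resource as hit-for-pass whenever the header is present at all.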

Request coalescing is an essential mechanism in edge caching that can significantly reduce the load on your origin and improve response times. It has pros and cons, and some implementations are better than others. The Nginx implementation can be suboptimal, mainly because it can add up to 500ms to the response time of each locked request. While still not ideal, my Nginx patch makes the interval configurable and lessens the downsides, so I hope it will be included in a future Nginx version.
